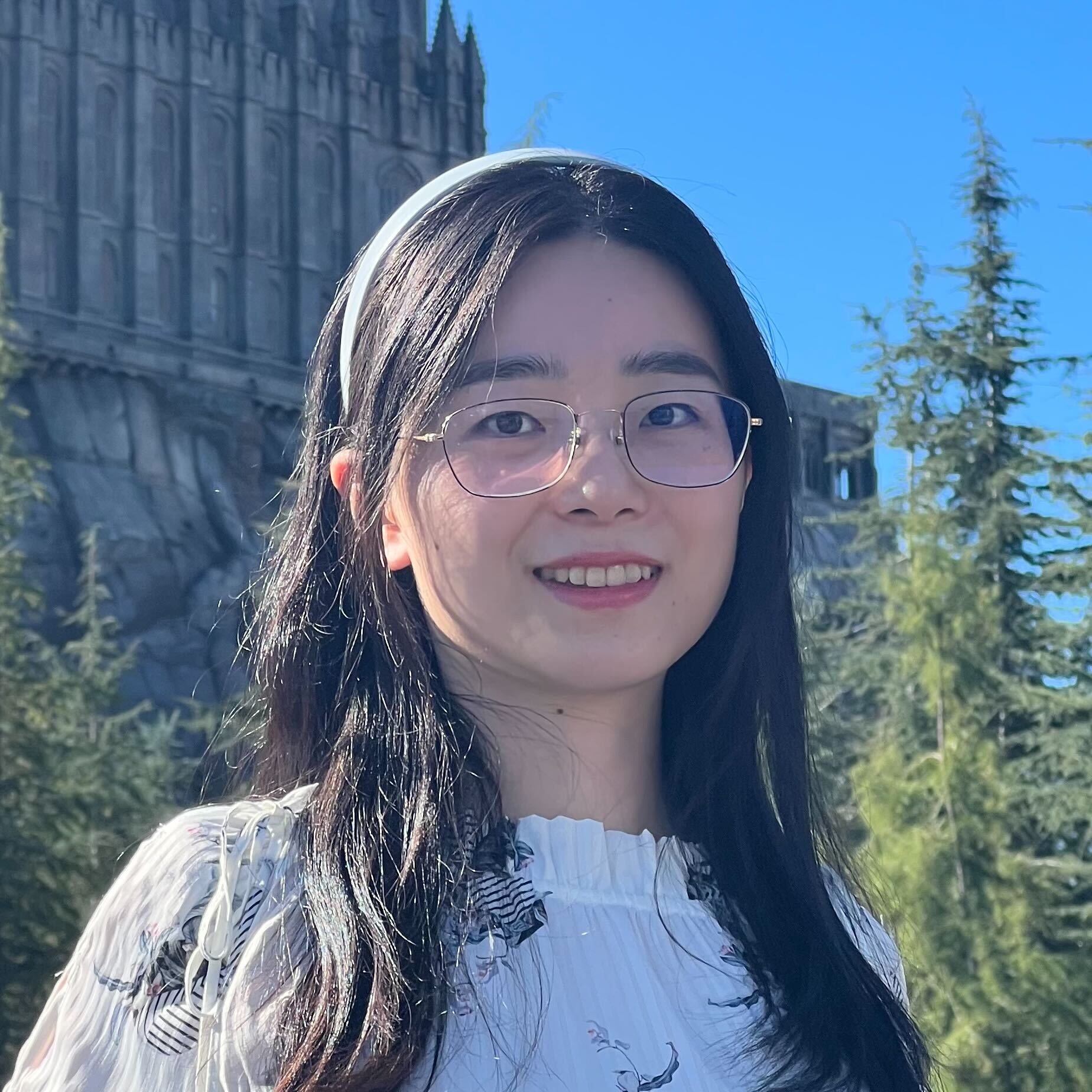

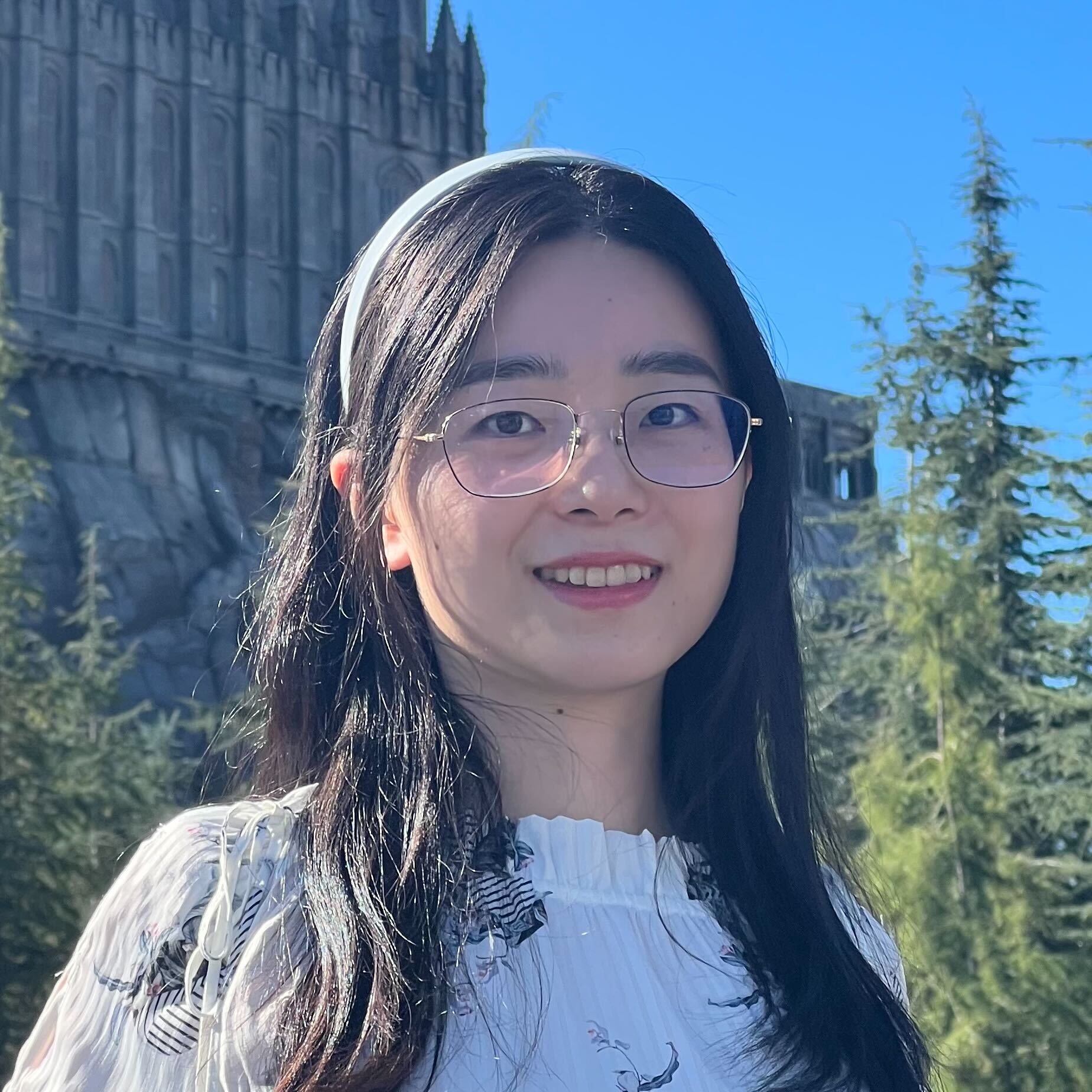

Haiyue Ma

Ph.D. Candidate, AI + Hardware Architecture, Princeton University

I am a Ph.D. candidate in Electrical and Computer Engineering at Princeton University, advised by Prof. David Wentzlaff. My research lies at the intersection of GPU systems architecture, hardware/software co-design, and microarchitecture, with a focus on modeling performance bottlenecks in large-scale machine learning workloads.

Most recently, I interned at NVIDIA (Summer 2024 & Summer 2025), where I worked on GPU performance modeling for Mixture-of-Experts (MoE) inference and on overlapping GPU kernels in FlashAttention-based LLM training.

Before starting my Ph.D., I spent three years at NVIDIA Shanghai, contributing to the Ampere and Orin architectures. I received my B.S. in Electrical Engineering from Washington University in St. Louis in 2018.

I also have broader interests in AI's impact on humanity, neuroscience (and its parallels with artificial neural networks), and physics. I actively bring insights from these areas into my research and my sci-fi writing. Read my Research-and-Personal-Interest statement.

My research focuses on performance modeling and scheduling of GPU-based systems for machine learning, as well as microarchitectural techniques for energy-efficient computing.

You can download my full CV here (updated Dec. 2025).

Email: hm1@princeton.edu

LinkedIn: My LinkedIn Profile